Generation: Drawing with Text

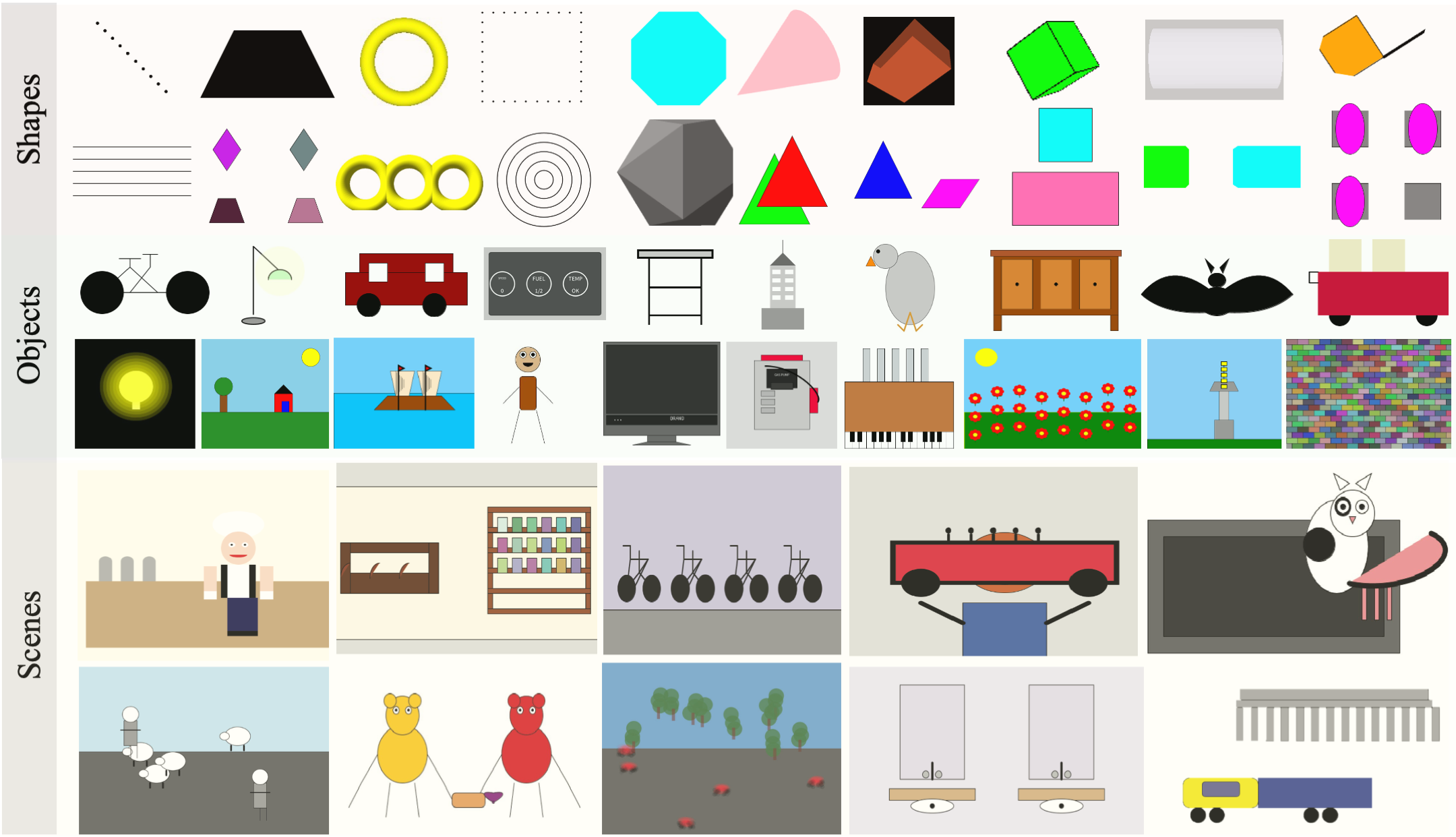

We test LLM's abilities to generate visual concepts of increasing complexity via a textual prompt → code → image procedure, and find that LLMs can visualize real-world concepts from across the visual hierarchy. LLMs are capable of generating non-trivial visual compositions; the model composes two unrelated concepts (“car shaped cake”), generates visual phenomena (“blurred image”), and manages to correctly interpret spatial relations (e.g. “a row of bicycles” arranged horizontally).

Textual feedback: Correcting with Text

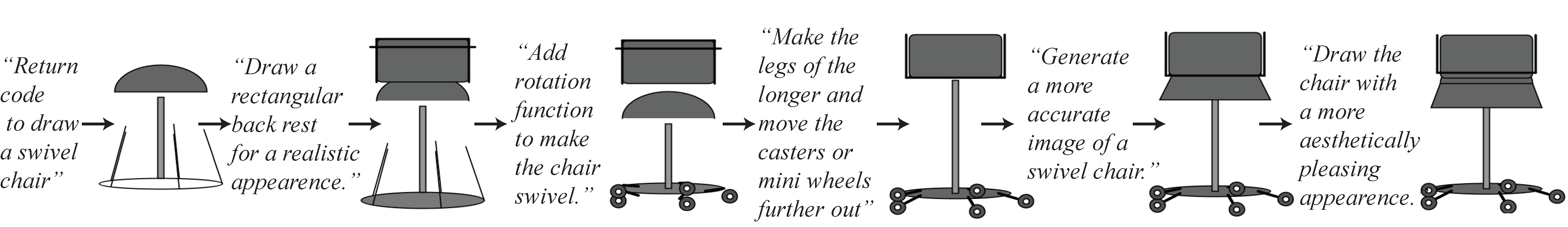

We demonstrate that the visual generation competence of a language model can be improved using text-based corrections. We do this by closing the feedback loop between the LLM and itself. Here, we first use the language model to generate code illustrating a concept. Following that, the model is repeatedly called by conditioning its generation on its previously generated code and prompted to ``improve its generated code''. We find that making such iterative calls to the model results in improved visual depictions.

Learning a Vision System from Text

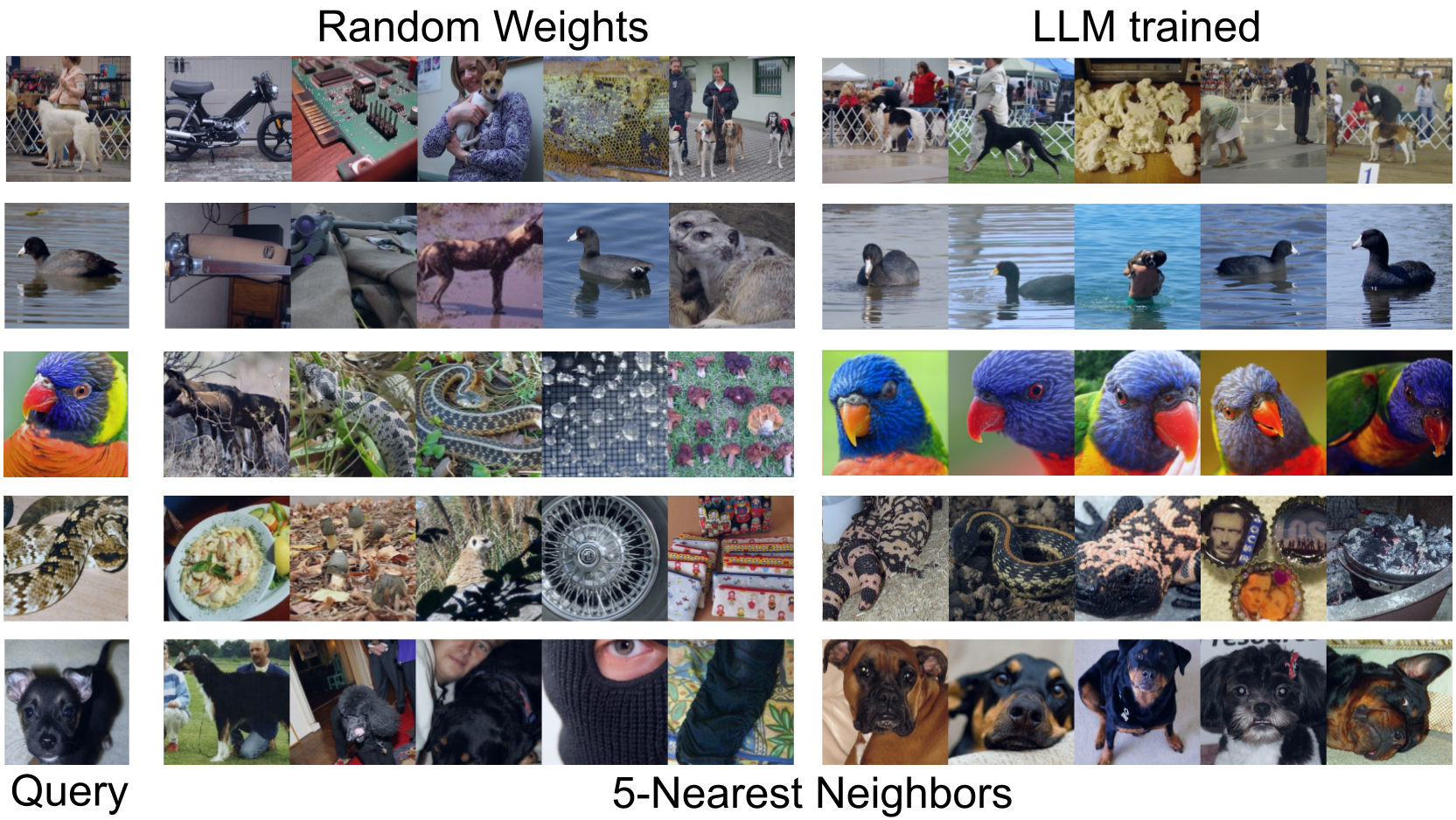

We study if LLM-generated images could serve as a data source for pre-training vision models and compare them to synthetically generated and natural images. We show it is possible for models trained entirely on procedurally generated data from LLMs to perform semantic judgments on natural images despite never having seen one before.

BibTeX

@inproceedings{sharma2024vision,

title={A Vision Check-up for Language Models},

author={Sharma, Pratyusha and Rott Shaham, Tamar and Baradad, Manel and Fu, Stephanie and Rodriguez-Munoz, Adrian and Duggal, Shivam and Isola, Phillip and Torralba, Antonio},

booktitle={arXiv preprint}

year={2024}

}